Deep Scattering: Rendering Atmospheric Clouds with Radiance-Predicting Neural Networks

We present a technique for efficiently synthesizing images of atmospheric clouds using a combination of Monte Carlo integration and neural networks. The intricacies of Lorenz-Mie scattering and the high albedo of cloud-forming aerosols make rendering of clouds (e.g. the characteristic silver lining and the ‘whiteness’ of the inner body) challenging for methods based solely on Monte Carlo integration or diffusion theory. We approach the problem differently. Instead of simulating all light transport during rendering, we pre-learn the spatial and directional distribution of radiant flux from tens of cloud exemplars. To render a new scene, we sample visible points of the cloud and, for each, extract a hierarchical 3D descriptor of the cloud geometry with respect to the shading location and the light source. The descriptor is input to a deep neural network that predicts the radiance function for each shading configuration. We make the key observation that progressively feeding the hierarchical descriptor into the network enhances the network's ability to learn faster and predict with high accuracy while using fewer coefficients. We also employ a block design with residual connections to further improve performance. A GPU implementation of our method runs at interactive rates and synthesizes images of clouds that are nearly indistinguishable from the reference solution. Our method thus represents a viable solution for applications such as cloud design and, thanks to its temporal stability, also for high-quality production of animated content.
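The two ideas in the abstract, a hierarchical descriptor oriented toward the light and a network that consumes it level by level through residual blocks, can be illustrated with a small NumPy sketch. This is not the authors' implementation: the function names (`hierarchical_descriptor`, `predict`), the stencil size, the number of levels, the doubling of spacing per level, the nearest-neighbor lookup, the layer width, and the random (untrained) weights are all assumptions chosen for illustration, and the view direction is ignored.

```python
import numpy as np

def sample_density(grid, points):
    """Nearest-neighbor density lookup at voxel-space points; zero outside the grid."""
    idx = np.rint(points).astype(int)
    inside = np.all((idx >= 0) & (idx < np.array(grid.shape)), axis=1)
    out = np.zeros(len(points))
    ii = idx[inside]
    out[inside] = grid[ii[:, 0], ii[:, 1], ii[:, 2]]
    return out

def local_frame(light_dir):
    """Orthonormal frame (rows are axes) whose z-axis points toward the light."""
    z = light_dir / np.linalg.norm(light_dir)
    helper = np.array([1.0, 0.0, 0.0]) if abs(z[0]) < 0.9 else np.array([0.0, 1.0, 0.0])
    x = np.cross(helper, z)
    x /= np.linalg.norm(x)
    y = np.cross(z, x)
    return np.stack([x, y, z])

def hierarchical_descriptor(grid, position, light_dir, levels=4, size=3, base_spacing=1.0):
    """Sample the density on nested stencils; each level doubles the spacing (assumed)."""
    frame = local_frame(np.asarray(light_dir, dtype=float))
    offs = np.arange(size) - (size - 1) / 2
    ox, oy, oz = np.meshgrid(offs, offs, offs, indexing="ij")
    local = np.stack([ox.ravel(), oy.ravel(), oz.ravel()], axis=1)  # (size^3, 3)
    rows = []
    for k in range(levels):
        spacing = base_spacing * 2 ** k
        world = np.asarray(position, dtype=float) + spacing * (local @ frame)
        rows.append(sample_density(grid, world))
    return np.stack(rows)  # (levels, size^3): fine to coarse

def init_params(rng, levels, stencil_len, width):
    """Random, untrained weights: one block per descriptor level (hypothetical layout)."""
    blocks = [{"w_in": rng.normal(0.0, 0.1, (stencil_len, width)),
               "w_h": rng.normal(0.0, 0.1, (width, width)),
               "b": np.zeros(width)} for _ in range(levels)]
    w_out = rng.normal(0.0, 0.1, width)
    return blocks, w_out

def predict(descriptor, blocks, w_out):
    """Feed one descriptor level per block; each block adds a residual update."""
    h = np.zeros(blocks[0]["b"].shape)
    for stencil, p in zip(descriptor, blocks):
        update = np.maximum(0.0, stencil @ p["w_in"] + h @ p["w_h"] + p["b"])  # ReLU
        h = h + update  # residual (skip) connection
    return float(h @ w_out)

# Usage on a toy 16^3 cloud of uniform density, lit from +z.
grid = np.ones((16, 16, 16))
desc = hierarchical_descriptor(grid, position=(8, 8, 8), light_dir=(0, 0, 1))
blocks, w_out = init_params(np.random.default_rng(0), levels=4, stencil_len=27, width=8)
radiance = predict(desc, blocks, w_out)  # meaningless until the weights are trained
```

Feeding the fine stencil first and coarser stencils to later blocks means each block only has to refine the running feature vector `h` with information from one scale, which is one plausible reading of "progressively feeding" the descriptor.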

Introduction

Our first comparison illustrates the importance of multiple scattering when rendering clouds. The second comparison shows the appearance of clouds rendered with different phase functions, including our chopped Lorenz-Mie phase function.
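To make the role of the phase function concrete, the sketch below evaluates the Henyey-Greenstein phase function, a common analytic stand-in, and checks numerically that it integrates to one over the sphere. It is not the chopped Lorenz-Mie phase function, whose definition is not given here; the function names and the anisotropy value `g = 0.85` are assumptions for illustration only.

```python
import math

def henyey_greenstein(cos_theta, g):
    """Henyey-Greenstein phase function, normalized over the unit sphere."""
    denom = 1.0 + g * g - 2.0 * g * cos_theta
    return (1.0 - g * g) / (4.0 * math.pi * denom ** 1.5)

def integrate_over_sphere(phase, n=20000):
    """Midpoint rule in mu = cos(theta) on [-1, 1], times 2*pi for the azimuth."""
    d_mu = 2.0 / n
    total = 0.0
    for i in range(n):
        mu = -1.0 + (i + 0.5) * d_mu
        total += phase(mu) * d_mu
    return 2.0 * math.pi * total

g = 0.85  # assumed anisotropy; positive values favor forward scattering
forward = henyey_greenstein(1.0, g)
backward = henyey_greenstein(-1.0, g)
norm = integrate_over_sphere(lambda mu: henyey_greenstein(mu, g))
print(f"forward/backward ratio: {forward / backward:.1f}, integral: {norm:.4f}")
```

A strongly forward-peaked lobe like this one sends most scattered light onward along its incoming direction, which is why many scattering events are needed before light inside a cloud becomes diffuse.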

Training data

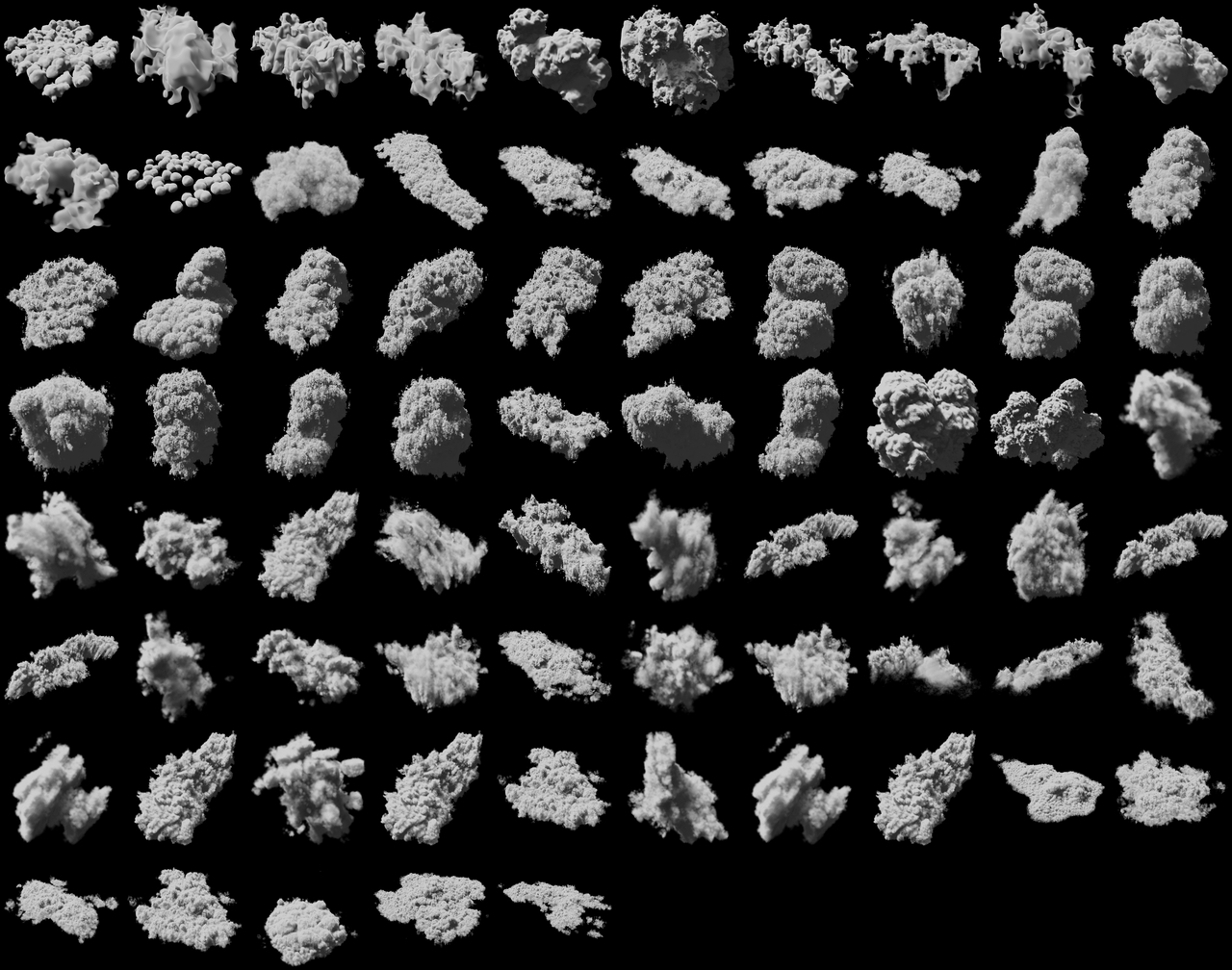

We train, validate, and test our networks on a mix of 75 procedurally generated, simulated, and artist-created clouds represented by voxel grids with resolutions within [100-1200]^3. The following shows an overview of the density grids we used for training and validation:

Evaluation

To assess the quality of our method, we evaluate it on the remaining 5 clouds: